We’ll never live in the Smart Cities of tomorrow if we continue to rely on the technologies and infrastructure of yesterday.

Data has surpassed oil as the world’s most valuable asset. Three out of four jobs will soon require some level of technology skills, and children in disadvantaged areas without access to the latest technology will continue to fall further behind. This is the digital divide, and it has major implications for Smart Cities initiatives.

At the recent Colorado Smart Cities Symposium, we learned of the growing social divide caused by a lack of broadband access, with only around 60% of residents in the Denver area currently having access. Population growth only exacerbates the problem, with over 400,000 new residents arriving in the past six years.

According to James Thurston, Managing Director of SmartCities4All, while Smart Cities initiatives aim to provide social inclusion, most are actually widening the digital divide because of a lack of focus on accessibility, particularly for persons with disabilities and older persons.

To create smart cities and regions, CIOs need to leverage data and technology while also implementing programs that promote community engagement, making citizens’ lives better and boosting economic development. Many Smart Cities aim to grow the economy by leveraging real-time, open-data-driven solutions and taking advantage of disruptive new technology in transport, communications and energy efficiency. However, according to Gartner, by 2023, 30% of smart city initiatives will be discontinued due to a lack of integrated services and data analysis.

“Smart city platforms need to extend beyond just IoT platforms to deliver full solutions that encompass citizen engagement and experience, ecosystems, data analytics and AI.” (Source: Gartner Predicts 2019: Smart Cities)

While infrastructure such as fast, reliable and affordable broadband internet is key, it’s also essential to make effective and efficient use of the many data sources available to a Smart City project, and to integrate these sources into smart, personalised digital experiences. For example, “Ten Gigabit Adelaide” has rightly been seen as a game changer, helping a somewhat isolated city that was struggling to attract investment transform itself into a globally competitive one. As Peter Auhl, the driving force behind the initiative, commented: “This world first infrastructure is showing the impact digital infrastructure can have on an economy and a city”.

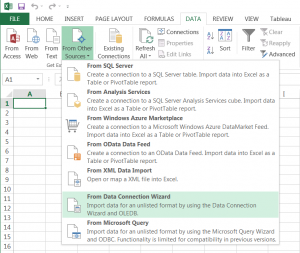

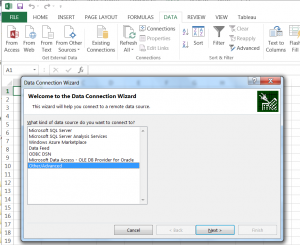

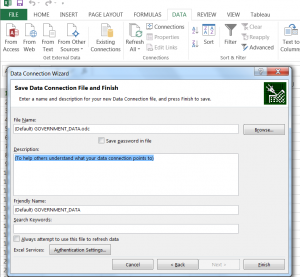

Yet many Smart City CIOs are still struggling to pull together all the disparate sources of data (IoT, Open Government Data, internal data, and third-party data such as Strava, weather, sentiment and survey data) and present them in a seamless digital experience for internal and external users.

In an ideal world, integrated digital experiences for citizens, agencies and employees would just work: securely, seamlessly, functionally, and with delight. But they don’t. Instead, most Smart Cities have a growing problem of too many systems and silos, redundant or overlapping tools and data, and communication challenges compounded by these “systems of chaos”.

No Smart City is immune to this challenge, and every Smart City CIO wishes that systems from different vendors worked better together.

This is where AppFusions can help. The AppFusions AlohaCloud platform addresses these issues by providing dynamic, real-time, collaborative digital experiences incorporating enterprise-grade blockchain (including seamless SSO and authentication where required).

The AlohaCloud platform is unique in that, while addressing the data side of the equation through deep integrations for real-time IoT data, Open Data and more, it also incorporates a full Digital Workplace (DWP) for collaboration.

This provides data-driven, fact-based collaboration with context. Particularly in the current environment of “fake news” and alternative facts, it’s now more important than ever for Smart Cities initiatives to be accountable, measurable and auditable.

Smart Cities need to become ‘collaborative, innovative problem solvers’. As Professor Gary Hamel commented: “The problem with the future is that it is different. If you are unable to think differently, the future will always arrive as a surprise”.

A great example of “thinking differently” is the US Department of Energy in Idaho, which has a vision to provide clean, secure, connected transportation, addressing critical challenges for zero-emission transportation systems (e.g. grid capabilities and charging strategies). The 10 to 20-year strategic objective is to demonstrate Autonomous, Connected, Electrified and Shared mobility transportation solutions. A short-term goal is to provide a digital experience for bus electrification management, engaging everyone from passengers and drivers to fleet planners, operators and manufacturers. AlohaCloud is the digital experiences platform powering this visionary initiative.

We will never have sustainable, vibrant and future-proofed local economies unless we embrace digital transformation to equip our local workforces and communities with the skills they need for the future. AlohaCloud enables this digital transformation, bridging the digital divide. Making Smart Cities smarter.

We’ll never live in the cities of tomorrow if we continue to rely on the technologies and infrastructure of yesterday.